Functional Knowledge Transfer with Self-supervised Representation Learning

IEEE International Conference on Image Processing, 2023

Read Paper @ IEEE Xplore | Read Paper @ Arxiv | Source Code @ Github | Watch Video @ Youtube | Check Poster

Self-Supervised Learning (SSL) promises to reduce the need for labeled data, but its reliance on large-scale unlabeled datasets limits its effectiveness in data-scarce environments. In such cases, SSL in its conventional form contributes little to performance. This project focuses on overcoming that limitation by exploring approaches that make SSL work better in low-data regimes.

This work pioneers the shift from conventional representational knowledge transfer to functional knowledge transfer. We achieve this by designing a learning approach where self-supervised learning (SSL) and downstream supervised tasks are jointly optimized. This synergy reinforces both tasks, allowing SSL to contribute meaningfully even when data is limited.

Concretely, the method trains a contrastive self-supervised objective and a supervised learning objective together on small-scale datasets, rather than running contrastive pretraining first and supervised training afterwards. This joint optimization improves performance across the domains we evaluated.
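To make the idea of a joint objective concrete, here is a minimal sketch in plain Python. It is not the paper's implementation: it assumes a SimCLR-style NT-Xent loss as the contrastive term, cross-entropy as the supervised term, and a simple weighted sum of the two. The function names, the `ssl_weight` parameter, and the `temperature` default are all hypothetical choices made for illustration.

```python
import math


def _normalize(v):
    """Scale a vector to unit length (guarding against a zero vector)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def nt_xent_loss(z1, z2, temperature=0.5):
    """Assumed contrastive term: NT-Xent over two augmented views.

    z1[i] and z2[i] are embeddings of two views of the same image
    (a positive pair); every other embedding in the batch is a negative.
    """
    z = [_normalize(v) for v in z1 + z2]
    n = len(z1)
    total = 0.0
    for i in range(2 * n):
        pos = (i + n) % (2 * n)  # index of the other view of sample i
        sims = [
            sum(a * b for a, b in zip(z[i], z[j])) / temperature
            for j in range(2 * n)
        ]
        # Log-sum-exp over all embeddings except the anchor itself.
        others = [s for j, s in enumerate(sims) if j != i]
        m = max(others)
        lse = m + math.log(sum(math.exp(s - m) for s in others))
        total += lse - sims[pos]
    return total / (2 * n)


def cross_entropy_loss(logits, labels):
    """Supervised term: mean softmax cross-entropy over the batch."""
    total = 0.0
    for row, y in zip(logits, labels):
        m = max(row)
        lse = m + math.log(sum(math.exp(v - m) for v in row))
        total += lse - row[y]
    return total / len(labels)


def joint_loss(z1, z2, logits, labels, ssl_weight=1.0, temperature=0.5):
    """Hypothetical joint objective: supervised loss plus weighted SSL loss."""
    supervised = cross_entropy_loss(logits, labels)
    contrastive = nt_xent_loss(z1, z2, temperature)
    return supervised + ssl_weight * contrastive


if __name__ == "__main__":
    # Toy batch: two images, two views each, two classes.
    view_a = [[1.0, 0.0], [0.0, 1.0]]
    view_b = [[0.9, 0.1], [0.1, 0.9]]
    logits = [[2.0, 0.5], [0.3, 1.7]]
    labels = [0, 1]
    print(joint_loss(view_a, view_b, logits, labels))
```

In a real training loop, both terms would be computed from the same encoder on each batch and the combined value backpropagated in one step, so that the self-supervised and supervised signals shape the shared representation at the same time.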

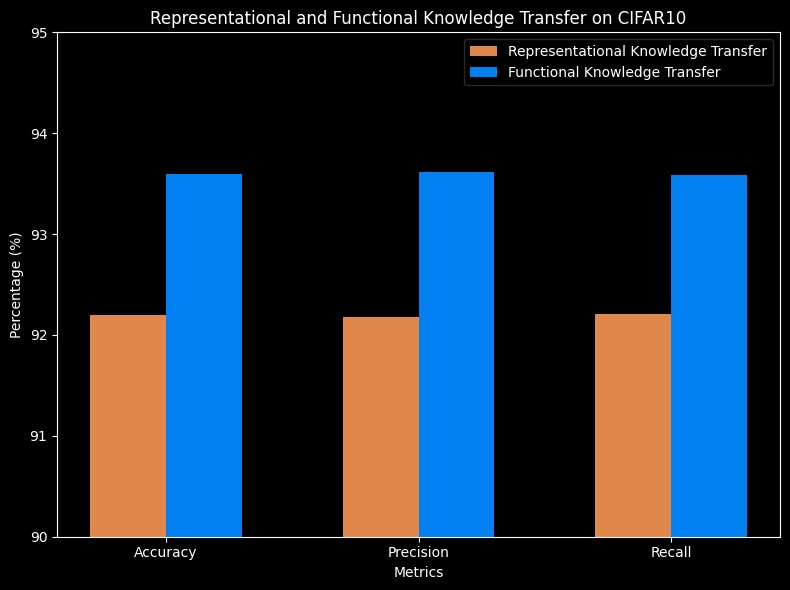

This approach lets us harness SSL without requiring vast amounts of data. To evaluate functional knowledge transfer, we tested it on three small-scale, diverse datasets: CIFAR10, Intel Image, and Aptos 2019. The results show consistent improvements in accuracy, precision, and recall, indicating that the approach outperforms conventional methods in low-data scenarios.

This approach to functional knowledge transfer in SSL has shown promising results, and it opens up avenues for further exploration.

To dive deeper into the details of this work, read the paper and watch the video on YouTube to learn more about how this method adapts SSL to low-data environments.


P. C. Chhipa et al., “Functional Knowledge Transfer with Self-supervised Representation Learning,” 2023 IEEE International Conference on Image Processing (ICIP), Kuala Lumpur, Malaysia, 2023, pp. 3339-3343, doi: 10.1109/ICIP49359.2023.10222142.